What is Hitogata? How does the face tracking program work? How can I make MMD facial animation from a video file?

Hitogata Brings Face Tracking to MMD

Hitogata is a new project from Mogg, the same person who created Face and Lips, the VMD Smoothing Tool, and MikuMikuMoving. It is a facial tracking system that aims to take video input and convert it into a VMD file for use in MMD. It also contains features for character creation, live facial tracking, and mouth tracking from volume levels.

Note: Hitogata is still under development, so the program is not yet feature-complete and may have bugs. The information below is accurate as of version 2.21, released on August 12, 2018. (The program itself reports this version as 0.3.14.)

If you would like to see the results of my attempts to use Hitogata, please scroll down to the heading “My Results with Hitogata.”

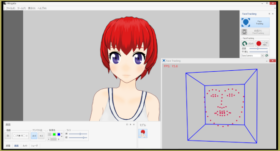

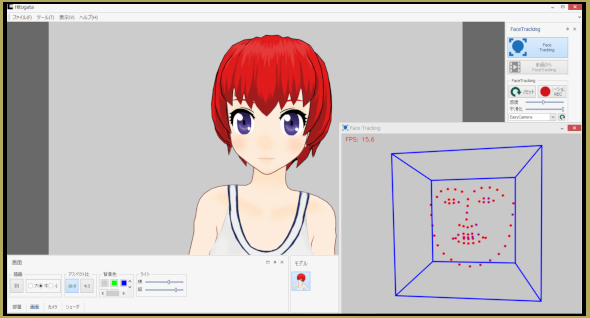

Hitogata’s User Interface

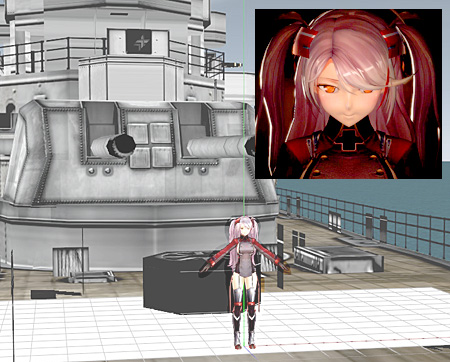

Hitogata opens with a blank, grey canvas and several menus. This is Hitogata’s main screen, and everything important is here. Each menu can be docked in a different position by clicking and dragging it, so you can find the configuration that suits you best. The screenshot here shows everything in its default position.

The grey canvas is the preview window. This is where you can look at what the face tracker is outputting. Click a character from the character menu to load it onto the preview screen.

On the right side of the screen is the Face Tracking menu. If you have a character selected, you can use the face tracker. The blue button at the top, labelled “Face Tracker”, is the live face tracker. The one directly below that is “track face from a video”. Below that is a set of options for face tracking, including mouth openness and animation smoothing, and options to use Kinect or Neuron Motion Capture. The menus at the bottom of this screen lead to “sound options” and “preset animations”.

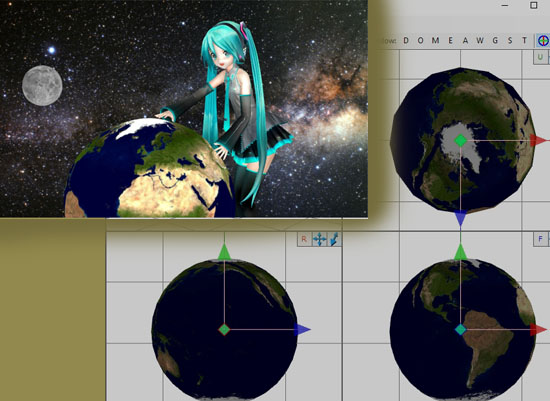

The menu on the bottom left defaults to “Shader.” The options are the default shader, which is meant to look like MMD’s shader, a greener shader, and a toon shader. The option to the left of that is “camera controls”, which gives various options related to the preview window. The one left of “camera controls” is “display options”, which allows you to modify how the windows look. The leftmost menu is “background”, which allows you to load an object into the background.

Recording Your Face with Hitogata

To record directly from a webcam, click the “Face Tracking” button. This will open a new window, which displays what the face tracker sees. There should be a blue box around your face and dots showing the position of your facial features. If the box is red and there are no facial tracking markers, the tracker is on but cannot detect a face. If there is no box and there are no markers, something may be stopping Hitogata from accessing the camera. Check that your camera is turned on, that your antivirus software is not blocking Hitogata from accessing it, and that no other application is trying to use it.

Using the “Reset” button on the main menu will reset the model’s position relative to the current tracked position. You should initialize the tracking when you are facing forward with your mouth closed and neutral and the eyes open and looking at the camera. This will cause the model to match the intended movement. If the recording starts in a different position, it could have issues with correctly tracking the movement.
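To see why the starting position matters, here is a minimal Python sketch of the general idea of measuring movement from a stored neutral pose. It is only an illustration: Hitogata’s internals are not documented here, and the `HeadPose` class, the `relative_pose` function, and the simple angle subtraction are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class HeadPose:
    """Head rotation in degrees (hypothetical representation, not Hitogata's)."""
    yaw: float
    pitch: float
    roll: float


def relative_pose(current: HeadPose, neutral: HeadPose) -> HeadPose:
    """Return the current pose measured from the stored neutral pose.

    Plain subtraction is a simplification that is good enough to show the idea.
    """
    return HeadPose(
        yaw=current.yaw - neutral.yaw,
        pitch=current.pitch - neutral.pitch,
        roll=current.roll - neutral.roll,
    )


# Neutral pose captured while the user was leaning 10 degrees to one side.
neutral = HeadPose(yaw=0.0, pitch=0.0, roll=10.0)

# Later the user sits up straight, so the tracker sees a roll of 0 degrees.
sitting_straight = HeadPose(yaw=0.0, pitch=0.0, roll=0.0)

# Measured from the bad neutral pose, the upright user appears to lean.
print(relative_pose(sitting_straight, neutral))
```

In this sketch, a neutral pose captured while leaning makes an upright user look tilted by 10 degrees the other way, which is why resetting while sitting straight and facing the camera gives the most predictable results.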

Pressing “record” on the facial tracking window will open a box asking where you would like to save the video file Hitogata creates. It defaults to an MP4 file, though you can also use DVX and WMV1 filetypes. Once you have chosen where to save the file, Hitogata will begin recording video and audio to it.

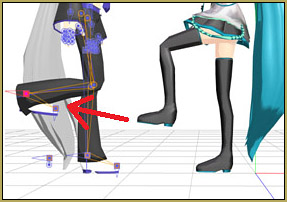

Face Tracking with Hitogata

Pressing “record” on the main window while the facial tracking window is open will record a VMD file in real time. Clicking “stop” will end the recording. This creates only a VMD file, not an audio file.

To record facial tracking from an existing video file, click “face tracking from video file”, which is the button that looks like a movie clip. This accepts MP4, AVI, and WMV files. Once a file has been loaded, click “record” on the main window to activate tracking. Press “play” in the video viewer to track through the video file. When the video file is complete, click “stop” in the main window to end tracking.

Consider playing with the sensitivity options to find something that works for you.

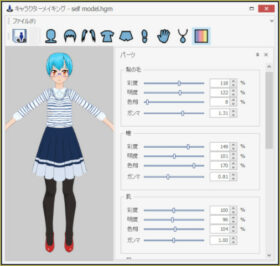

Hitogata’s Character Creator

Hitogata uses its own character file format, HitogataModel. This means that PMX and PMD files cannot be used with Hitogata, and Hitogata files cannot be used in MMD. Hitogata can also open FBX, Metasequoia, and X files.

To open the character creator, click the top option in the “Tools (T)” menu. This will open a menu with a series of options for customizing your character and their clothing. Most of the options have sliders that allow for additional modification of the character model. This character creator is currently fairly limited, but Mogg adds more items with each update. Saved character models will appear in the “character” menu.

Issues with Hitogata’s August 14, 2018 Build

This is not the finished version of Hitogata. This means there are bugs, missing features, and instability.

If you open the webcam after live face tracking has already been opened, you will get an error. The program doesn’t seem to disconnect from the webcam properly until it is closed completely.

Some issues with Hitogata are applicable to almost any program that tracks facial features. Facial hair that covers the mouth and strong glasses prescriptions can confuse the trackers, giving poor results.

Mogg has acknowledged that the face tracking system can have trouble detecting people with very dark skin tones.

The face tracker can be quite jittery for me. Even when giving it a still video frame, the tracking dots moved. This jittering most often affected the mouth and body position. Sometimes the mouth trackers detected mouth movement when the mouth was closed. Adjusting the smoothing can help improve the results, but it’s not perfect.
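To give a feel for what a smoothing setting does, here is a minimal Python sketch of an exponential moving average, one common way to damp jitter in a stream of tracked values. This is not a description of Hitogata’s actual smoothing method, which I don’t know. The `smooth` function, the `alpha` parameter, and the sample readings are all made up for illustration.

```python
def smooth(values, alpha=0.3):
    """Exponential moving average; a smaller alpha means heavier smoothing."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be greater than 0 and at most 1")
    smoothed = []
    previous = None
    for value in values:
        if previous is None:
            # The first reading has nothing to blend with, so pass it through.
            previous = value
        else:
            # Blend the new reading with the running average.
            previous = alpha * value + (1.0 - alpha) * previous
        smoothed.append(previous)
    return smoothed


# Made-up mouth-openness readings for a closed mouth that the tracker jitters on.
jittery = [0.0, 0.2, 0.0, 0.2, 0.0, 0.2]
print(smooth(jittery, alpha=0.3))
```

The trade-off is the same one you will notice in the program: heavier smoothing hides more jitter but also makes the model react more slowly to real movement, so quick mouth shapes can get washed out.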

There appears to be a bug in the current build of Hitogata that causes VMD exports to have incorrect timing when the FPS is low. In my case, a clip that was originally seven seconds long became a 33-second animation. It matched the original clip when it was sped up about four times. My computer may simply be slower than Hitogata expects, which could affect how the VMD file is processed. Using MMD’s “expand” tool, you can make the animation match.
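If you want to work out how much an export needs to be squeezed, the arithmetic is simple. Here is a small Python sketch using the durations from my clip. The function names are my own, and the only outside fact it relies on is that MMD animations run at 30 frames per second. With these numbers the stretch works out to 33 / 7, or roughly 4.7 times.

```python
MMD_FPS = 30  # MMD animations run at 30 frames per second


def retime_scale(source_seconds, exported_seconds):
    """Factor to multiply frame numbers by so the export matches the source."""
    return source_seconds / exported_seconds


def retime_frames(frame_numbers, scale):
    """Scale keyframe numbers and round them to whole frames."""
    return [round(frame * scale) for frame in frame_numbers]


# Durations from the clip described above: 7 seconds in, 33 seconds out.
scale = retime_scale(7, 33)
print(f"scale factor: {scale:.3f}")

# First, middle, and last frame of the stretched 33-second export.
exported_frames = [0, 495, 33 * MMD_FPS]
print(retime_frames(exported_frames, scale))
```

The last frame of the stretched export, frame 990, lands on frame 210, which is seven seconds at 30 frames per second. The same factor is what you would aim for when compressing the animation in MMD or any other retiming tool.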

Sometimes, the text in menus is cut off. It’s still legible if you can read Japanese, but if you’re like me and are using Google Translate to figure out where options are, it’s tricky. This will probably be fixed.

My Results with Hitogata

Despite the issues mentioned above, I was able to create a decent video with Hitogata. I decided to demonstrate it with Bohemian Rhapsody. I sped up and slowed down the clips so they were all the same length.

Currently, I think Hitogata is a good base for tracking facial expressions, even if it can be fiddly at times. If you can get the FPS of the face tracking system high enough, it could be a practical option for tracking the head and upper body as well. I’m going to keep experimenting with it to see if I can get better results.

Hitogata can be downloaded at its web page. If you find a bug, e-mail Mogg at the e-mail address found there.

— — —

Hey, it updated and I can’t read any of it

Which button is the face track one?

I can’t seem to load the model I created in the character creator part of the program

I figured out how to work it, but when I try face tracking the camera thinks I’m leaning when I’m not

The program will consider the position it captures when you open the “tracking” menu or click “reset” to be the default position. Have you tried sitting as straight as possible with your face in the middle of the frame?